
Prakamya Mishra

I'm an applied research engineer at AMD AI in Seattle, where I am part of the GenAI team led by Zicheng Liu, working on novel techniques to efficiently train LLMs and image/video generation models on large-scale clusters. Previously, I was a graduate researcher in the BioNLP Lab at UMass Amherst (Mentors: Hong Yu & Zonghai Yao), a graduate researcher with the Amazon Science NLU Team (Mentor: Mayank Kulkarni), and an applied scientist intern with the Amazon Science SLU Team (Mentors: Abhishek Malali & Masha Belyi). I completed my Master's in Computer Science at UMass Amherst.


Research

I'm interested in natural language processing, deep learning, and generative AI. Most of my research is about language models, representation learning, and applications of NLP.


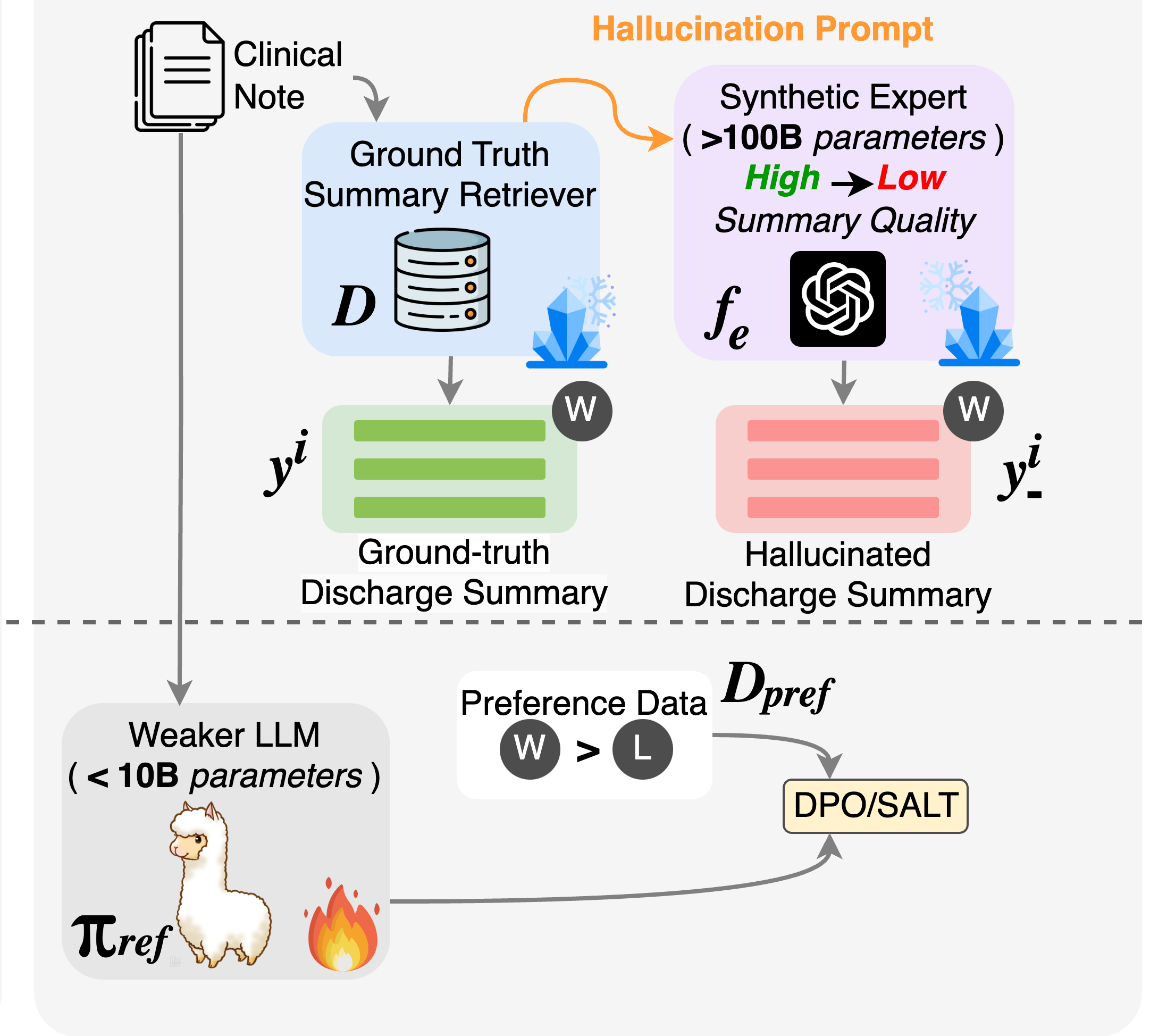

SYNFAC-EDIT: Synthetic Imitation Edit Feedback for Factual Alignment in Clinical Summarization

Prakamya Mishra*, Zonghai Yao*, Parth Vashisht, Feiyun Ouyang, Beining Wang, Vidhi Dhaval Mody, Hong Yu

arXiv, 2024

This study leverages synthetic edit feedback to improve factual accuracy in clinical summarization using DPO and SALT techniques. Our approach demonstrates the effectiveness of GPT-generated edits in enhancing the reliability of clinical NLP applications.


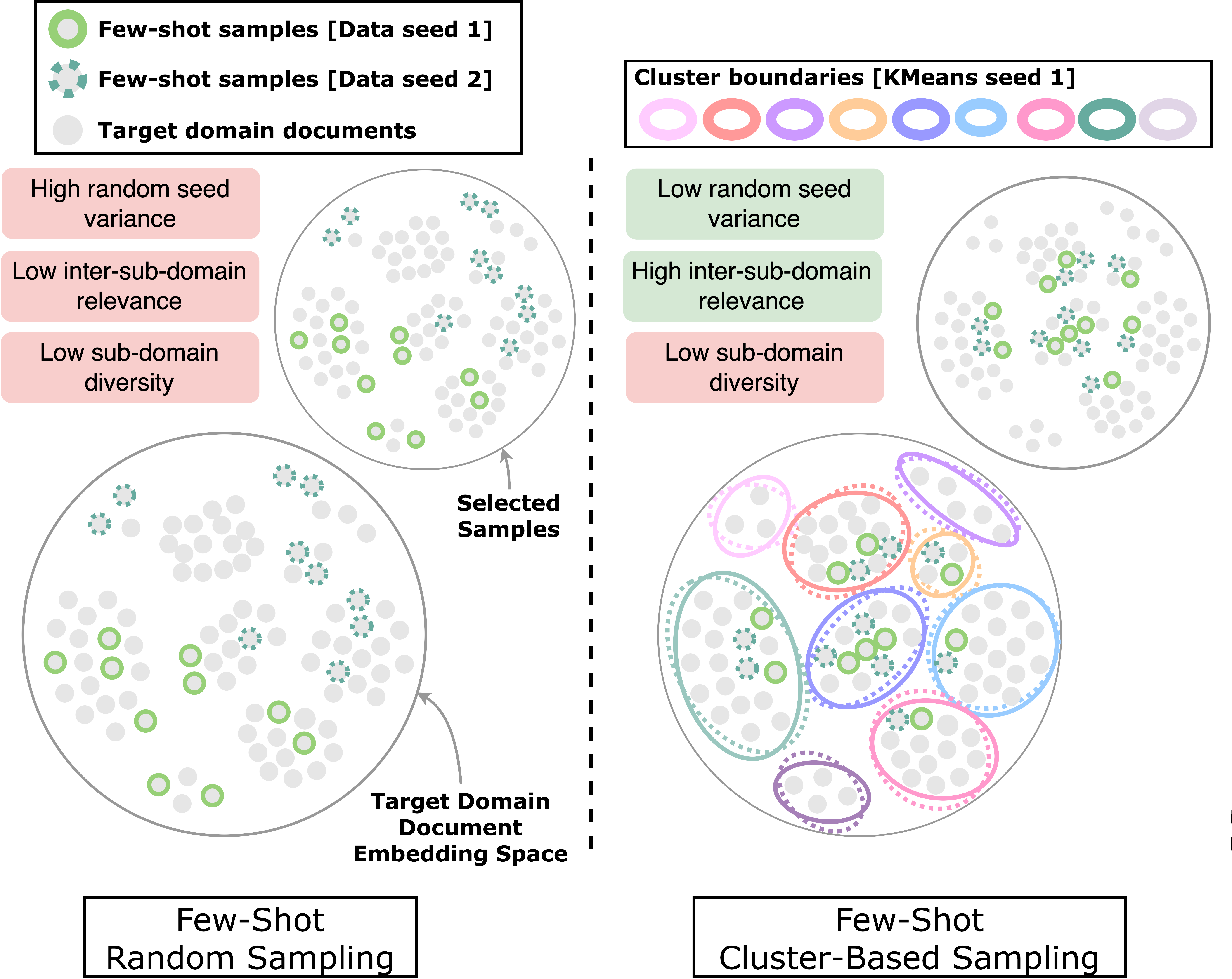

Clustering-based sampling for few-shot cross-domain keyphrase extraction

Prakamya Mishra*, Lincy Pattanaik*, Arunima Sundar*, Nishant Yadav, Mayank Kulkarni

Findings of EACL 2024

We propose a novel clustering-based few-shot sampling approach that uses the sub-domain information intrinsically available in the dataset as topics to select few-shot samples from the target domains, which are then labelled and used for fine-tuning.


Synthetic Imitation Edit Feedback for Factual Alignment in Clinical Summarization

Prakamya Mishra*, Zonghai Yao*, Shuwei Chen, Beining Wang, Rohan Mittal, Hong Yu

SyntheticData4ML workshop at NeurIPS 2023

In this work, we propose a new pipeline that uses ChatGPT instead of human experts to generate high-quality feedback data for improving factual consistency in the clinical note summarization task.


STEPs-RL: Speech-Text Entanglement for Phonetically Sound Representation Learning

Prakamya Mishra

Long paper at PAKDD 2021 (Oral Presentation)

In this work, we present a novel multi-modal deep neural network architecture that uses speech and text entanglement for learning phonetically sound spoken-word representations.


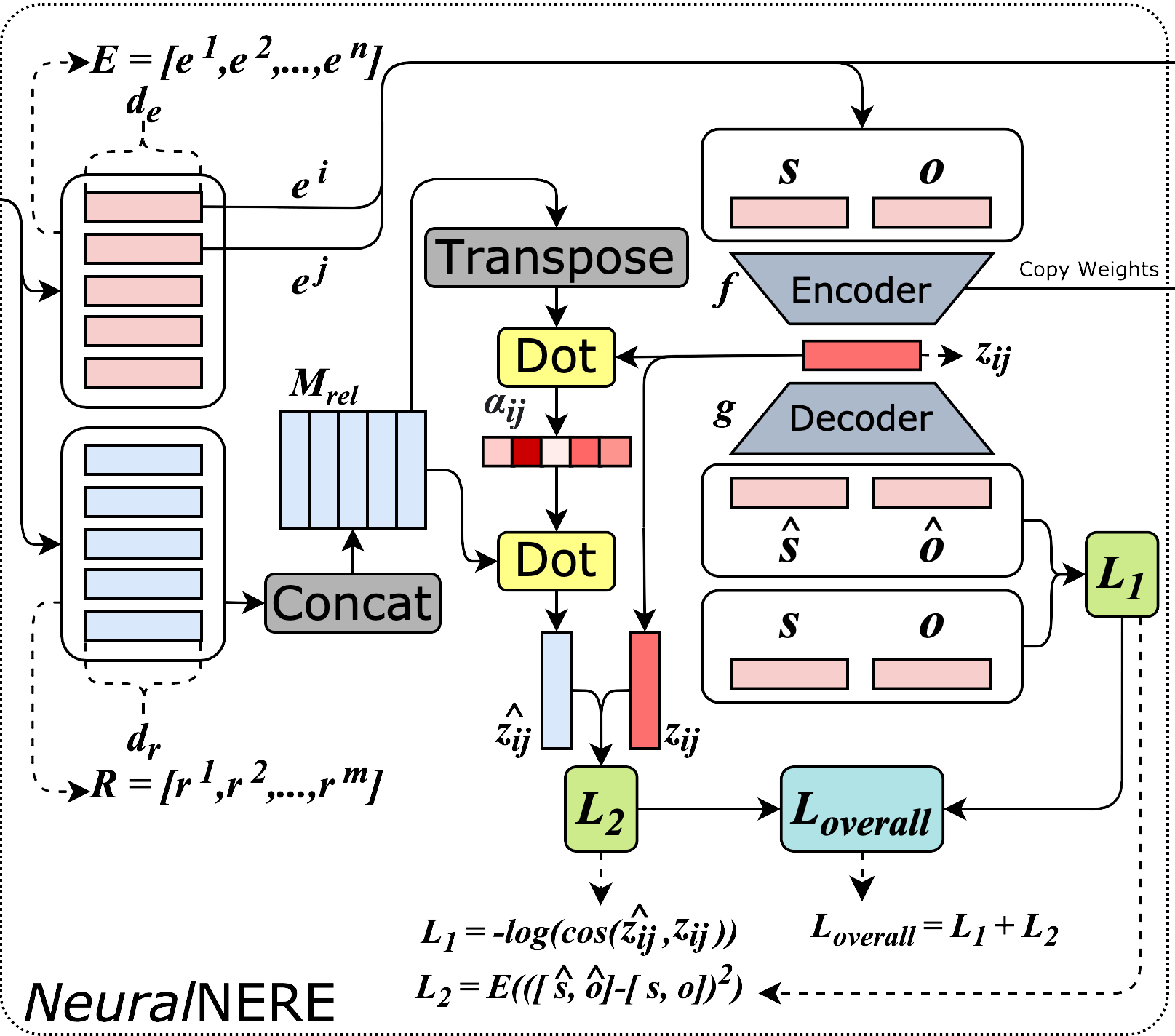

NeuralNERE: Neural Named Entity Relationship Extraction for End-to-End Climate Change Knowledge Graph Construction

Prakamya Mishra, Rohan Mittal

Tackling Climate Change using Machine Learning workshop at ICML 2021 (Spotlight Presentation)

We propose NeuralNERE, an end-to-end Neural Named Entity Relationship Extraction model for constructing climate change knowledge graphs directly from the raw text of relevant news articles. Additionally, we introduce a new climate change news dataset (the SciDCC dataset) containing over 11k news articles scraped from the Science Daily website.


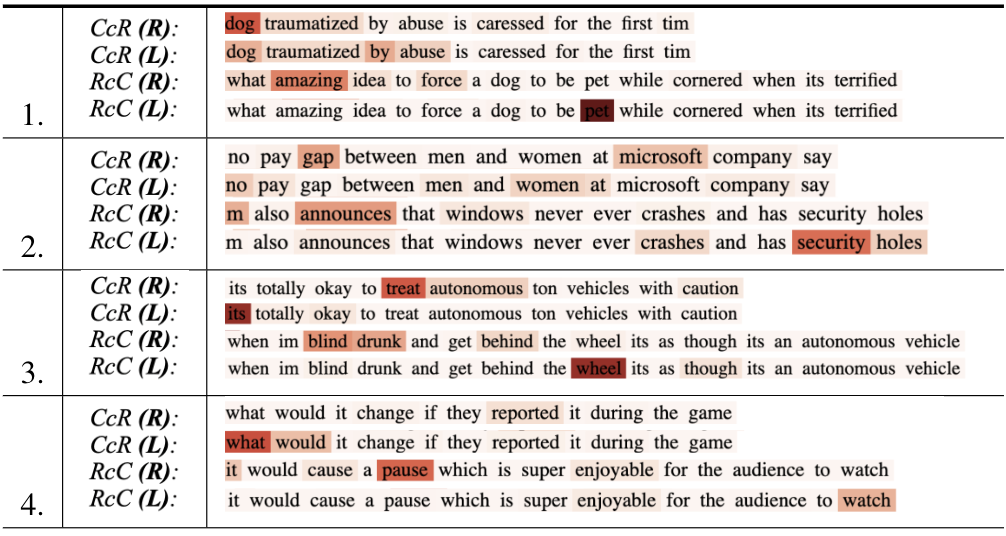

Bi-ISCA: Bidirectional Inter-Sentence Contextual Attention Mechanism for Detecting Sarcasm in User Generated Noisy Short Text

Prakamya Mishra, Saroj Kaushik, Kuntal Dey

MRC-HCCS workshop at IJCAI 2021

We developed a novel Bidirectional Inter-Sentence Contextual Attention mechanism (Bi-ISCA) to capture inter-sentence dependencies for detecting sarcasm. We also explain the model's behavior and predictions by analyzing the attention maps and identifying the words responsible for invoking sarcasm.

Miscellanea


Reviewer, EACL SRW 2024

Reviewer, EMNLP 2024


Design and source code from Jon Barron's website.